Leave-One-Out Cross-Validation: Which Model Performs Best?

Consider a dataset with N points. The parameter optimisation is performed automatically on 9 of the 10 image pairs, and then the performance of the tuned parameters is measured on the remaining pair.

A Quick Intro to Leave-One-Out Cross-Validation (LOOCV)

This CV technique trains on all samples except one.

This is a variant of leave-p-out (LPO). The call `gd_sr.fit(X_train, y_train)` can take some time to execute because we have 20 combinations of parameters and a 5-fold cross-validation.

I successfully built a model with high predictive ability. The leave-one-out cross-validation method, or simply leave-one-out (LOO), is often used to assess model performance or to select among competing models when the sample size is small. Once the GridSearchCV class is initialized, the last step is to call the fit method of the class and pass it the training features and labels.
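As a rough sketch of the grid search described above, the snippet below fits a `GridSearchCV` object over 20 parameter combinations with 5-fold cross-validation. The dataset (iris), the estimator (`KNeighborsClassifier`), and the parameter grid are assumptions chosen only to keep the example small; the name `gd_sr` follows the call quoted earlier.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Illustrative data: the original post does not say which dataset was used.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)

# 10 values x 2 values = 20 parameter combinations (an assumed grid).
param_grid = {
    "n_neighbors": list(range(1, 11)),
    "weights": ["uniform", "distance"],
}

gd_sr = GridSearchCV(
    estimator=KNeighborsClassifier(),
    param_grid=param_grid,
    scoring="accuracy",
    cv=5,  # 5-fold cross-validation on the training data only
)

# fit receives the training features and the training labels.
gd_sr.fit(X_train, y_train)

print("best parameters:", gd_sr.best_params_)
print("best mean CV accuracy:", round(gd_sr.best_score_, 3))
print("accuracy on held-out test set:", round(gd_sr.score(X_test, y_test), 3))
```

The test split is touched only once, after the search has finished, so it plays no part in choosing the parameters.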

The rest of the dataset is used for training, and the single held-out data point is predicted after training. I need to perform leave-one-out cross-validation of an RF model. Split the dataset into a training set and a testing set, using all but one observation as the training set.
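One possible way to run leave-one-out cross-validation on a random forest with scikit-learn is sketched below. It assumes that "RF" means a random forest classifier and uses a small synthetic dataset; both are assumptions, since the original gives neither the data nor the task.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import LeaveOneOut, cross_val_score

# Small synthetic dataset (assumed) so that N separate fits stay cheap.
X, y = make_classification(
    n_samples=40, n_features=5, n_informative=3, n_redundant=0, random_state=0
)

rf = RandomForestClassifier(n_estimators=25, random_state=0)

# LeaveOneOut yields one split per observation: N-1 points train, 1 point tests.
loo = LeaveOneOut()
scores = cross_val_score(rf, X, y, cv=loo, scoring="accuracy")

# Each score is 0 or 1 (the single held-out point is either right or wrong),
# so the mean is the fraction of points predicted correctly.
print("number of fits:", len(scores))
print("LOOCV accuracy:", scores.mean())
```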

In machine learning and data mining, k-fold cross-validation is a form of cross-validation in which the data is divided into k approximately equal subsets, with each subset used as test data in turn and the remaining k-1 subsets used as training data. Leave-one-out cross-validation is the special case in which k equals the number of observations. For 10-fold cross-validation, your validation set consists of 1/10 of the data set.
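To make the 1/10 figure concrete, here is a short sketch that splits 100 made-up samples with `KFold(n_splits=10)` and prints the size of each training and validation part. The sample count and the shuffling seed are arbitrary choices.

```python
import numpy as np
from sklearn.model_selection import KFold

# 100 dummy samples; only the indices matter here.
X = np.arange(100).reshape(-1, 1)

kf = KFold(n_splits=10, shuffle=True, random_state=0)

sizes = []
for fold, (train_idx, val_idx) in enumerate(kf.split(X)):
    # Each fold validates on 1/10 of the data and trains on the other 9/10.
    sizes.append((len(train_idx), len(val_idx)))
    print(f"fold {fold}: train={len(train_idx)}, validation={len(val_idx)}")
```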

The AIC is 4234. Leave-one-out cross-validation (LOOCV).

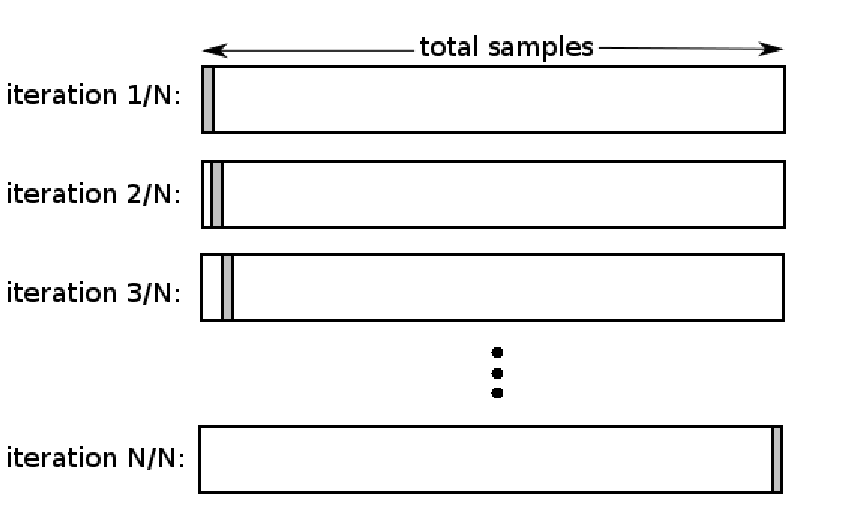

There are 3 main types of cross-validation techniques. LOOCV (leave-one-out cross-validation): in this method, we train on the whole dataset except for one data point, and then iterate so that each data point is left out once. This is where the method gets the name leave-one-out cross-validation.

The leave-one-out cross-validation (LOOCV) approach has the advantages of producing model estimates with less bias and of being easy to apply to smaller samples. LOOCV gives a nearly unbiased estimate of how well a model will perform on out-of-sample data. In LOO, one observation is taken out of the training set and used as the validation set.

It is a special case of leave-p-out cross-validation (LpOCV) with p = 1. To evaluate the performance of a model on a dataset, we need to measure how well the predictions made by the model match the observed data. Cross-validation, or k-fold cross-validation, is a procedure used to estimate the performance of a machine learning algorithm when making predictions on data not used during the training of the model.

Cross-validation usually works by repeatedly selecting a subset of the data to leave out, fitting a model using the data that was not left out, and then evaluating the estimated model using the left-out data. We use validation to help in selecting hyperparameters. We train the model without this validation set and later test whether it correctly classifies the held-out observation.

LOOCV model evaluation: leave-one-out cross-validation (LOOCV) is an exhaustive cross-validation technique.

The cross-validated scores are computed with `cvs = cross_val_score(best_clf, features_important, y_train, scoring="r2", cv=mycv)` and then averaged (`mean_cross`). The best model is chosen from the candidates and is then used on the test set. However, the model choice is typically made using only the testing dataset, which can be misleading by favouring unnecessarily complex models.

Source: LOOCV operations. Over the course of our collaboration with medical oncologists and laboratory biomarker scientists across phase II clinical trials exploring 35 biomarkers, we have developed and implemented a SAS software macro. The approach has advantages as well as disadvantages.

Leave-one-out cross-validation works as follows.

Monte Carlo cross-validation, leave-one-out cross-validation (LOOCV), k-fold cross-validation, stratified cross-validation, hold-out cross-validation. Training, validating, and testing.

Cross-validation has a single hyperparameter, k, that controls the number of subsets that a dataset is split into. The leave-one-out method of cross-validation uses one observation from the sample data set as the validation data and the remaining observations as training data. What you just said is dividing the data into three parts.

So, in short, cross-validation is a model evaluation method. In LOOCV, instead of leaving out a portion of the dataset as testing data, we select one data point as the test data. For the specialized cases of ridge regression, logistic regression, Poisson regression, and other generalized linear models.
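The mention of ridge regression above is cut off in the source, so what follows is an assumption about where it was heading rather than a reconstruction. One well-known result for ridge regression with a fixed penalty and no intercept is that the leave-one-out residuals can be obtained from a single fit, using the diagonal of the hat matrix, instead of N separate fits. The sketch below checks that shortcut against the brute-force loop on synthetic data; it covers ridge only and makes no claim about the other model families named above.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d, lam = 30, 3, 1.0  # sample size, features, ridge penalty (all assumed)
X = rng.normal(size=(n, d))
y = X @ np.array([1.5, -2.0, 0.5]) + rng.normal(scale=0.1, size=n)


def ridge_fit(X, y, lam):
    """Ridge coefficients without an intercept: (X'X + lam*I)^-1 X'y."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)


# Brute force: refit the model N times, each time leaving one point out.
loo_resid_brute = np.empty(n)
for i in range(n):
    mask = np.arange(n) != i
    beta_i = ridge_fit(X[mask], y[mask], lam)
    loo_resid_brute[i] = y[i] - X[i] @ beta_i

# Shortcut: one fit on all the data, then rescale each residual by 1 - h_ii,
# where h_ii is the i-th diagonal entry of H = X (X'X + lam*I)^-1 X'.
H = X @ np.linalg.solve(X.T @ X + lam * np.eye(d), X.T)
resid = y - H @ y
loo_resid_fast = resid / (1.0 - np.diag(H))

print("shortcut matches brute force:", np.allclose(loo_resid_brute, loo_resid_fast))
print("LOOCV MSE:", np.mean(loo_resid_fast ** 2))
```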

Leave-One-Out Cross-Validation. This project aims to understand and implement all the cross-validation techniques used in machine learning. When p = 1 in leave-p-out cross-validation, it is leave-one-out cross-validation.

The ageglm model has 505 degrees of freedom, with a null deviance of 400100 and a residual deviance of 120200. Each model is fitted to the training data and used to predict the value of the validation data point; a prediction error is then calculated by subtracting the predicted value from the observed validation value.

The choice of cross-validation scheme depends mainly on how many samples you have. The first error, 2502985, is the mean squared error (MSE) for the training set, and the second error, 2502856, is for the leave-one-out cross-validation (LOOCV). An advantage of using this method is that we make use of all data points, and hence it has low bias.
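The two kinds of error discussed above, the training MSE and the LOOCV MSE, can be computed by hand as in the sketch below. The synthetic data and the choice of `LinearRegression` are assumptions, so the numbers it prints bear no relation to the figures quoted in the text.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import LeaveOneOut

# Synthetic regression data (assumed): y is roughly 3x + 2 plus noise.
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(50, 1))
y = 3.0 * X[:, 0] + 2.0 + rng.normal(scale=1.0, size=50)

# Training MSE: fit on all points and evaluate on the same points.
full_model = LinearRegression().fit(X, y)
train_mse = mean_squared_error(y, full_model.predict(X))

# LOOCV MSE: one model per held-out point.
errors = []
for train_idx, val_idx in LeaveOneOut().split(X):
    model = LinearRegression().fit(X[train_idx], y[train_idx])
    prediction = model.predict(X[val_idx])[0]
    # Prediction error = observed validation value minus predicted value.
    errors.append(y[val_idx][0] - prediction)

loocv_mse = float(np.mean(np.square(errors)))

print("training MSE:", train_mse)
print("LOOCV MSE:", loocv_mse)
```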

Note that we leave only one observation out of the training set. k is often 10 or 5.

Leave-one-out cross-validation uses the following approach to evaluate a model: for a dataset having n rows, the 1st row is selected for validation and the remaining n-1 rows are used to train the model. You then repeat for all folds.

One commonly used method for evaluating a model is leave-one-out cross-validation (LOOCV). It is a k-fold CV with K = N, where N is the number of samples in the dataset.
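The equivalences mentioned in this post (LOOCV as k-fold with K = N, and as leave-p-out with p = 1) can be checked directly with scikit-learn's splitters. This is a small sanity check on eight dummy samples, not a benchmark.

```python
import numpy as np
from sklearn.model_selection import KFold, LeaveOneOut, LeavePOut

X = np.arange(8).reshape(-1, 1)  # 8 dummy samples
n = len(X)


def validation_sets(splitter):
    """Return the sorted list of validation index tuples a splitter produces."""
    return sorted(tuple(val.tolist()) for _, val in splitter.split(X))


loo_sets = validation_sets(LeaveOneOut())
kfold_sets = validation_sets(KFold(n_splits=n))  # K = N
lpo_sets = validation_sets(LeavePOut(p=1))       # p = 1

print("LOO == KFold(K=N):", loo_sets == kfold_sets)
print("LOO == LeavePOut(p=1):", loo_sets == lpo_sets)
```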

Unfortunately, LOOCV can be expensive, requiring a separate model to be fit for each point in the training data set. Cross-validation is a very common practice in machine learning.

Therefore, given a bunch of different machine learning algorithms (logistic regression, random forest, etc.), cross-validation lets us evaluate which of these algorithms is best suited for a particular type of data set. For leave-one-out, your validation set consists of a single data point. You divide your data set into training and validation sets, and for each of those divisions (folds) you train a model on the training set and assess its loss function on the validation set.
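A minimal sketch of that comparison is shown below: two candidate algorithms are scored on the same folds and the one with the higher mean accuracy is picked. The dataset (iris), the two candidates, and the 5-fold stratified split are illustrative assumptions; swapping in `LeaveOneOut()` for `cv` would give the leave-one-out version at a higher cost.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# Candidate models (assumed for illustration).
candidates = {
    "logistic regression": make_pipeline(
        StandardScaler(), LogisticRegression(max_iter=1000)
    ),
    "random forest": RandomForestClassifier(n_estimators=50, random_state=0),
}

# A fixed seed means every candidate is evaluated on the same folds.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

mean_scores = {}
for name, model in candidates.items():
    scores = cross_val_score(model, X, y, cv=cv, scoring="accuracy")
    mean_scores[name] = scores.mean()
    print(f"{name}: mean accuracy = {scores.mean():.3f} (std {scores.std():.3f})")

best_name = max(mean_scores, key=mean_scores.get)
print("best by cross-validation:", best_name)
```

Small differences in mean score can fall within fold-to-fold noise, which is why the standard deviation is printed alongside the mean.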

If the subset of the data that is left out is selected at random, then the results may differ from one run to the next.

Cross Validation Explained: Evaluating Estimator Performance, by Rahil Shaikh, Towards Data Science
